Famous tech blogger Marques Brownlee, also known as MKBHD, has published the results of his traditional blind comparison of popular smartphone cameras in the video "The Blind Smartphone Camera Test Winners!". For the test, Marques's team took 20 smartphones and shot three photos of the blogger with each one: in daylight, in low light, and in portrait mode.

To make the test fair, the blogger updated each smartphone to the latest software version, wiped the camera lenses, and charged every phone to 100% so that power-saving mechanisms would not affect the shots. Only automatic settings were used during the tests, since that is how most users shoot.

Next, the metadata was stripped from all 60 photos so that it was impossible to tell which phone had taken each one, and the photos were uploaded to a voting site. There, participants were shown two images from random smartphones at a time and asked to choose the one they liked more. This setup pitted every smartphone against the others and made it possible to use the Elo rating system (the one used to rank chess players) to calculate the relative "strength" of each smartphone's camera. Here are the results.
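To illustrate how pairwise votes can turn into Elo ratings, here is a minimal sketch. The starting rating of 1500 and the K-factor of 32 are common textbook defaults and are assumptions here; the video does not say which parameters were used. Each vote is treated as one "game": the preferred photo's phone wins, and the number of points that change hands depends on how surprising the result was.

```python
# Minimal Elo sketch for "which photo do you like more?" votes.
# Assumptions (not from the video): every phone starts at 1500, K = 32.


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(ratings: dict[str, float], winner: str, loser: str, k: float = 32.0) -> None:
    """Apply one vote: the winner gains exactly what the loser loses."""
    exp_win = expected_score(ratings[winner], ratings[loser])
    delta = k * (1.0 - exp_win)  # an upset (low exp_win) moves more points
    ratings[winner] += delta
    ratings[loser] -= delta


def rate(
    phones: list[str],
    votes: list[tuple[str, str]],
    start: float = 1500.0,
    k: float = 32.0,
) -> dict[str, float]:
    """Process (winner, loser) votes in order and return final ratings."""
    ratings = {phone: start for phone in phones}
    for winner, loser in votes:
        update(ratings, winner, loser, k)
    return ratings


# Hypothetical phones and votes, for illustration only.
print(rate(["A", "B"], [("A", "B")]))  # A gains 16 points, B loses 16
```

With two equally rated phones the expected score is 0.5, so a single win moves 16 points under these assumptions. Note that plain sequential Elo depends on the order in which votes are processed; with thousands of votes the effect is small, but it is one reason the resulting scores are best read as relative rather than absolute.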

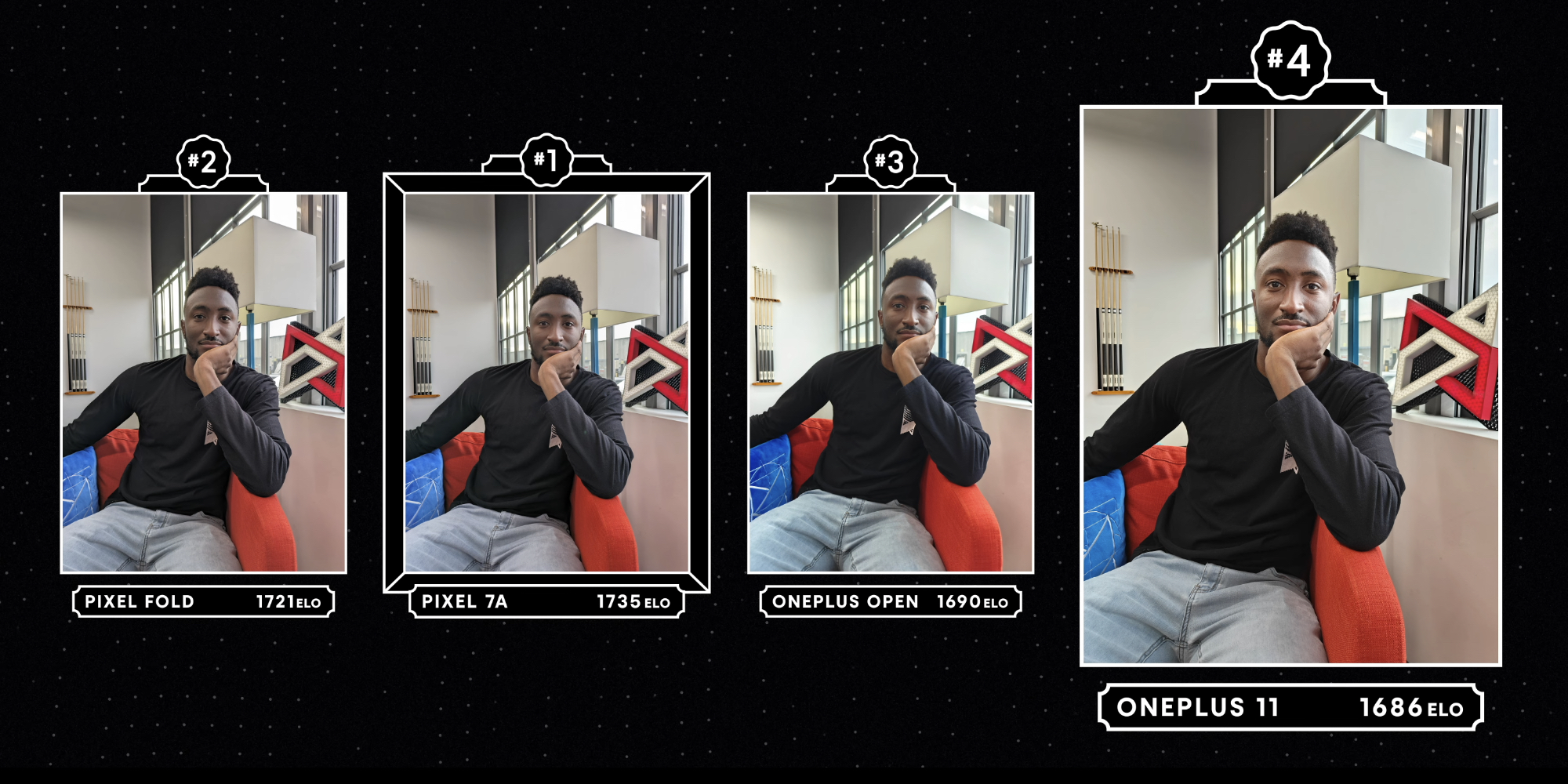

In the daylight test, the winner was the Pixel 7a, Google's mid-range smartphone. What comes next is more interesting: second and third places went to the foldable Pixel Fold and OnePlus Open, respectively.

Marques himself rated the OnePlus 11 highest in the blind test; in the final calculations, it took 4th place.

Notably, the iPhone 15 Pro came last: its shot turned out darker than the rest. But this does not mean that users simply want the brightest pictures: 19th place out of 20 went to the Galaxy S23 Ultra, which, on the contrary, pushed the exposure too high.

The low-light stage plays out like a sports movie: the iPhone 15 Pro, the loser of the daylight test, came out on top, followed by the Pixel 8 Pro and Pixel 7a. All three delivered excellent night shots without overexposure.

Where does the overexposure come from? From overly aggressive HDR, as in the photos from the OnePlus Open, Zenfone 10 and Oppo Find X6 Pro. OnePlus seems to have first brightened the whole photo, then used a neural network to find the sky and darken it again. A slight HDR "halo" is also visible in the Galaxy S23 Ultra photo, though far less than in this trio.

Portrait mode works differently on each of the tested smartphones: they simulate different focal lengths and zoom levels, so for a fairer comparison the photographers adjusted the shooting distance to keep the composition similar across pictures. The Pixel 8 Pro was the voters' favorite, followed by the Galaxy Z Fold 5 and the iPhone 15 Pro. Marques noted that the background blur looks most realistic on the iPhone. Overall, all three leaders produced decent results that were not over-processed.

After the individual tests, it's time to take stock and pick the best camera among those tested. To do this, each smartphone's ratings from the three tests were averaged, and the phones were ranked from 1 to 20 by that average. The top three turned out to be unexpected: the Pixel 7a came first, narrowly followed by the Pixel 8 Pro, with the Pixel Fold in third place. Sony's camera phone came last. Here is the full ranking:
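The overall ranking step can be sketched as a simple average followed by a sort. The phone names and ratings below are made-up placeholders, not MKBHD's data, and a plain unweighted mean is assumed since the video describes the scores as averaged.

```python
# Sketch of the overall ranking: average each phone's three Elo scores,
# then sort from best (place 1) to worst.
# Names and ratings are hypothetical placeholders, not the real results.
from statistics import mean


def overall_ranking(
    scores: dict[str, tuple[float, float, float]],
) -> list[tuple[int, str, float]]:
    """Return (place, phone, average rating), best first."""
    averaged = {phone: mean(s) for phone, s in scores.items()}
    ordered = sorted(averaged.items(), key=lambda item: item[1], reverse=True)
    return [(place, phone, avg) for place, (phone, avg) in enumerate(ordered, start=1)]


hypothetical_scores = {
    # (daylight, low light, portrait)
    "Phone A": (1600.0, 1500.0, 1550.0),
    "Phone B": (1450.0, 1650.0, 1580.0),
    "Phone C": (1550.0, 1300.0, 1500.0),
}

for place, phone, avg in overall_ranking(hypothetical_scores):
    print(f"{place}. {phone}: {avg:.1f}")
```

This prints Phone B first (1560.0), Phone A second (1550.0) and Phone C last (1450.0). It also shows why one bad test hurts so much: Phone C is competitive in daylight and portrait, but its weak low-light score drags its average below everyone else's.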

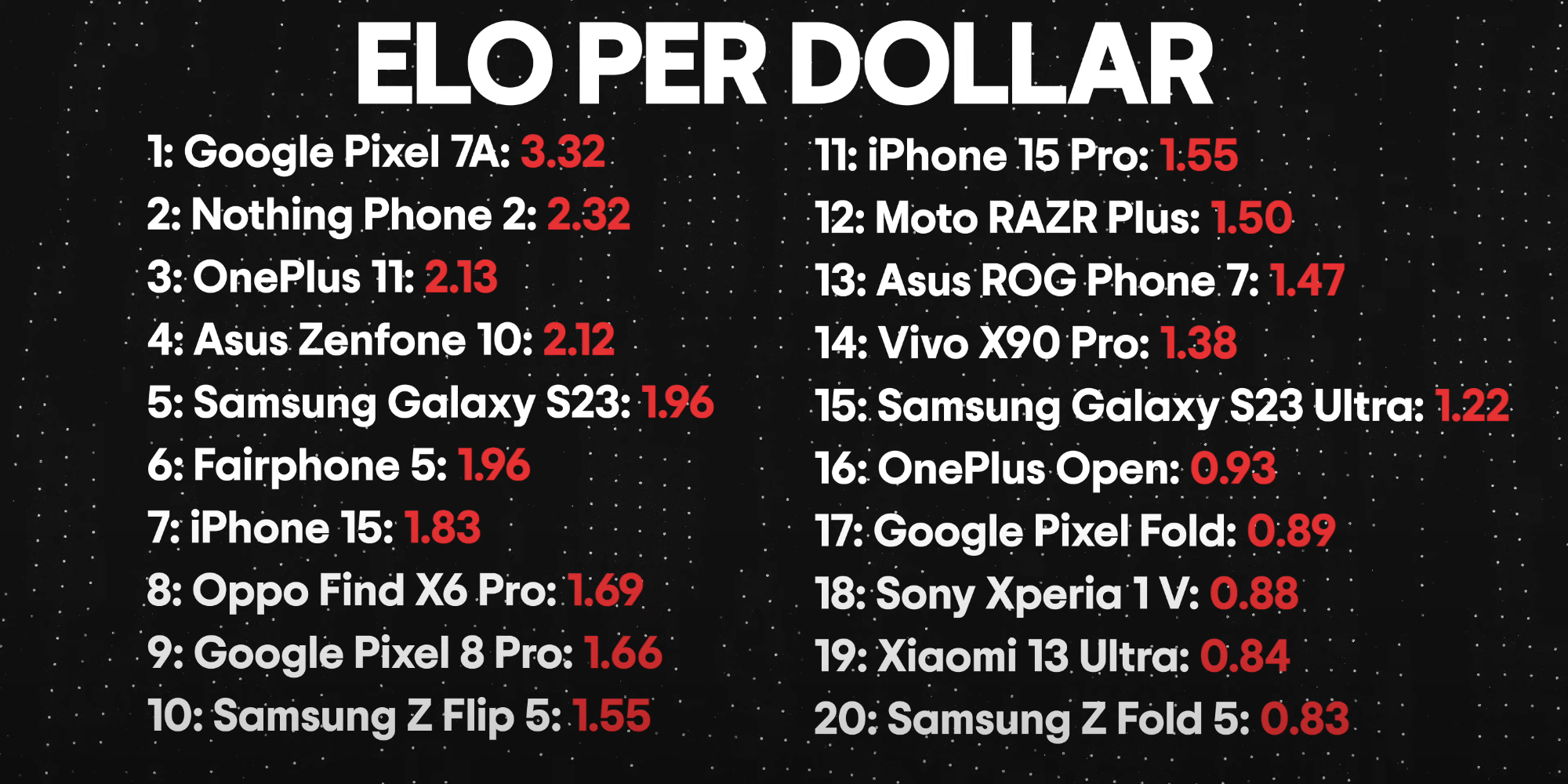

Marques also prepared a "best for your money" ranking. Quite literally: to compile it, each smartphone's Elo rating was divided by the device's price in dollars. The first two places went to mid-range phones: the same Pixel 7a and the Nothing Phone (2). They are followed by the OnePlus 11 and Asus Zenfone 10.
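The value metric is just a ratio, sketched below. The prices and ratings are hypothetical placeholders, and using the averaged Elo rating as the numerator is an assumption based on the description of the overall ranking.

```python
# Sketch of the "best for your money" metric: Elo rating divided by price in USD.
# Ratings and prices are hypothetical placeholders, not the real figures.


def value_ranking(phones: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """phones maps name -> (average Elo rating, price in USD); best value first."""
    per_dollar = {name: rating / price for name, (rating, price) in phones.items()}
    return sorted(per_dollar.items(), key=lambda item: item[1], reverse=True)


hypothetical = {
    "Budget phone": (1500.0, 500.0),
    "Flagship": (1600.0, 1200.0),
    "Foldable": (1550.0, 1800.0),
}

for name, score in value_ranking(hypothetical):
    print(f"{name}: {score:.2f} Elo points per dollar")
```

In a sketch like this, Elo scores stay within a few hundred points of the starting value while prices differ several-fold, so the price term tends to dominate. That is consistent with cheaper phones topping such a list even when a flagship takes better photos.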

Notably, Sony's flagship is no longer last in the value ranking: yes, it is expensive and performed terribly in one of the tests, which dragged down its average score, but the Xiaomi 13 Ultra and Samsung Galaxy Z Fold 5 fared even worse on this measure.

Of course, such tests cannot give a complete picture of how well a smartphone shoots. For people who rarely photograph other people, or who shoot with manual settings, such comparisons are practically useless. But tests with a large audience do show what the average user prefers.