RunwayML has released its app for iPhone, giving users access to Gen-1, the company's neural network for video processing. With it, you can restyle a video in a few seconds, replace objects in it, or add new ones.

You can record a video with the app's camera or upload an existing one from your smartphone. The service uses only the first 5 seconds of a video, so for longer clips you should trim the fragment you need in advance, for example in the built-in Photos app.

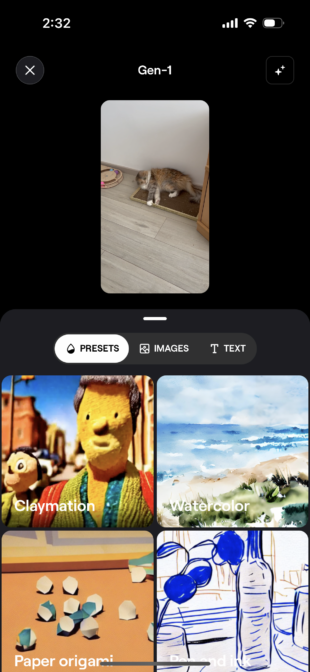

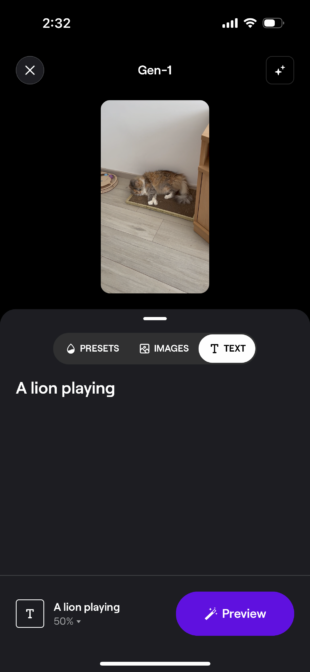

You can apply one of five preset styles to the video, upload an image to replace an object, or describe in text what you want to see in the finished video. A slider controls how strongly the result is blended with the source video (50% by default).

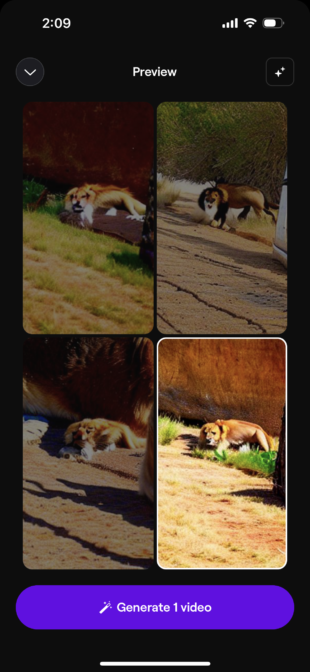

The app quickly generates four preview variants of the result, one of which you can then render. Rendering is fast: it takes 1–2 minutes.

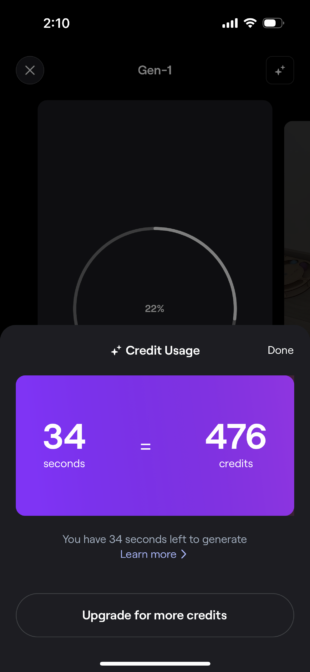

Note that without a paid plan, RunwayML is only good for testing. Each second of generated video costs 14 credits, and the free plan gives the user 525 credits (400 for registering and 125 for choosing a plan). Credits cannot be topped up without paying for a plan. In addition, videos made on the free plan carry a small watermark, which is removed in the paid version.
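To see why the free credits only cover testing, here is a small back-of-the-envelope calculation using the figures above. It is a sketch under assumptions: it treats billing as a flat 14 credits per second of rendered output and assumes every render uses the full 5-second limit; whether previews or other actions consume credits is not stated here and is not modeled. The names `free_tier_budget`, `CREDITS_PER_SECOND` and the other constants are hypothetical, chosen only for illustration.

```python
# Figures taken from the article; the billing model itself is an assumption:
# a flat rate per second of rendered output, nothing charged for previews.
CREDITS_PER_SECOND = 14
FREE_CREDITS = 400 + 125  # 400 for registering + 125 for choosing a plan
MAX_CLIP_SECONDS = 5      # the service uses only the first 5 seconds


def free_tier_budget(credits: int = FREE_CREDITS) -> tuple[float, int]:
    """Return (seconds of video affordable, number of full 5-second renders)."""
    seconds = credits / CREDITS_PER_SECOND
    clip_cost = CREDITS_PER_SECOND * MAX_CLIP_SECONDS  # cost of one maximal clip
    full_clips = credits // clip_cost
    return seconds, full_clips


seconds, clips = free_tier_budget()
print(f"{seconds} s of video, or {clips} full 5-second clips")
```

Under these assumptions the free credits come to 37.5 seconds of output, that is, seven full 5-second renders with credits left over for part of an eighth.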

The interface also mentions upcoming support for Gen-2, which generates video from a text prompt, as well as photo editing with background, object, or style replacement.

An Android version of RunwayML has not been announced yet, but the web version of Gen-2 is available to everyone at this link.